The past year at Microsoft has been interesting, to say the least! I’ve participated in countless customer conversations, workshops and architecture design sessions. During these customer interactions, data mesh has often been a central subject of debate.

What I’ve learned is that data mesh is a relatively new subject. It’s a concept that must be shaped by each company’s unique requirements for ownership, organization, security, pace of change, technology and cost management. Balancing all of these requirements isn’t easy, so companies pick an architecture that matches most of them.

In this blog post, I want to share different architecture design patterns and the considerations for choosing each. I’ll explain what drives companies and why they favor one design over another.

Scenario 1: Fine-grained fully federated mesh

The first design pattern is how Zhamak Dehghani describes data mesh in its purest theoretical form. It’s fine-grained, highly federated and uses many small, independently deployable components. The figure below shows an abstract example of such an architecture design.

In the model above, data distribution is peer-to-peer, while all governance-related metadata is logically centralized. Data in this topology is owned, managed and shared by each individual domain. Domains remain flexible and don’t rely on a central team for coordination or data distribution.
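To make the split between peer-to-peer data distribution and logically centralized metadata a bit more concrete, here’s a minimal Python sketch. It’s an illustration under assumptions, not a reference implementation: `MetadataCatalog`, `Domain`, `ProductMetadata` and `consume` are hypothetical names, and everything lives in memory. The catalog only stores governance metadata, while each domain keeps and serves its own data, so a consumer discovers a product centrally and then pulls it directly from the owning domain.

```python
from dataclasses import dataclass, field


@dataclass
class ProductMetadata:
    """Governance metadata held in the logically central catalog (no data)."""
    name: str
    owner_domain: str
    schema: dict[str, str]
    classification: str


class MetadataCatalog:
    """Hypothetical central catalog: stores metadata, never the data itself."""

    def __init__(self) -> None:
        self._entries: dict[str, ProductMetadata] = {}

    def register(self, meta: ProductMetadata) -> None:
        self._entries[meta.name] = meta

    def lookup(self, name: str) -> ProductMetadata:
        return self._entries[name]


@dataclass
class Domain:
    """A domain owns, manages and serves its own data products."""
    name: str
    datasets: dict[str, list[dict]] = field(default_factory=dict)

    def publish(self, catalog: MetadataCatalog, name: str,
                schema: dict[str, str], classification: str,
                rows: list[dict]) -> None:
        # The data stays inside the domain; only metadata goes to the catalog.
        self.datasets[name] = rows
        catalog.register(ProductMetadata(name, self.name, schema, classification))

    def serve(self, name: str) -> list[dict]:
        # Peer-to-peer: consumers pull directly from the owning domain.
        return list(self.datasets[name])


def consume(catalog: MetadataCatalog, domains: dict[str, Domain], name: str) -> list[dict]:
    # Discover the product via central metadata, then fetch it from its owner.
    meta = catalog.lookup(name)
    return domains[meta.owner_domain].serve(name)


catalog = MetadataCatalog()
sales = Domain("sales")
sales.publish(catalog, "orders", {"id": "int", "amount": "float"}, "internal",
              [{"id": 1, "amount": 9.5}])
print(consume(catalog, {"sales": sales}, "orders"))  # [{'id': 1, 'amount': 9.5}]
```

The point of the sketch is that the catalog never holds rows; in a real deployment, `serve` would be an interface owned and operated by the domain itself.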

In the fine-grained and fully federated mesh, each data product is approached as an architectural quantum. In simple terms, this means you may instantiate many different, small data product architectures for serving and pulling data across domains. The image below shows how this would work.

The fine-grained and fully federated mesh enables fine-grained domain specialization. It offers organizational flexibility and fewer dependencies because the interaction is many-to-many. Finally, it promotes intensive reuse of data because many data products are created, and each data product becomes an architectural quantum.

Although the fine-grained and fully federated mesh looks perfect, companies raise concerns when they look deeper. First, the topology requires conformance from all domains on interoperability, metadata, governance and security standards. Standardization on these dimensions doesn’t happen spontaneously. It’s a difficult exercise that requires you to break through political boundaries. It requires you to move your architecture and way of working through different stages: pre-conceptualization, conceptualization, discussion, writing and implementation. You need critical mass and support from the wider audience.

Second, companies fear capability duplication and heavy network utilization. Many small data product architectures dramatically increase the number of infrastructure resources, which can make your architecture costly. The granularity of data product architectures also becomes problematic when cross-domain data lookups and data quality validations are performed intensively. Data gravity and decentralization don’t go hand in hand.

The fine-grained fully federated mesh is an excellent choice, but hard to achieve. I often see this topology at companies that are born in the cloud, operate multi-cloud, are relatively young and have many highly skilled software engineers. This topology might also be a good choice when there’s already a high degree of autonomy within your company.

Scenario 2: Fine-grained and fully governed mesh

To overcome the federation concerns, I see many companies adjusting the previous topology by adding a central layer of distribution. While this topology doesn’t implement a full data mesh architecture, it does adhere to many data mesh principles: each domain has a clear boundary and autonomous ownership over its own applications and data products, but those data products are required to be distributed via a central logical entity.

The topology visualized above addresses some of the concerns observed in the fine-grained fully federated mesh. It addresses data distribution and gravity concerns, such as time-variant and non-volatile considerations for large data consumers. Domains don’t need to distribute large historical datasets because data is persisted closer together on a shared storage layer.

Companies also feel this topology more easily addresses conformance concerns. For example, you can block data distribution or consumption, enforce metadata delivery or require a specific way of working. The multiple-workspaces-shared-lake design is a frequently applied best practice. Domains create and manage data products in their own workspaces, but distribution to other domains happens via a central storage layer using domain-specific containers.
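The following Python sketch shows how a central distribution layer in a multiple-workspaces-shared-lake setup could enforce conformance at publish time. It assumes a hypothetical `SharedLake` class with one container per domain and an assumed list of required metadata fields; it isn’t modeled on any specific cloud service. Publishing fails if the domain has been blocked or if required metadata is missing.

```python
from dataclasses import dataclass

# Metadata the central layer insists on; this field list is an assumption.
REQUIRED_METADATA = {"owner", "description", "classification"}


@dataclass
class PublishRequest:
    domain: str
    product: str
    metadata: dict
    rows: list


class SharedLake:
    """Hypothetical central storage layer with one container per domain."""

    def __init__(self) -> None:
        self.containers: dict[str, dict[str, list]] = {}
        self.blocked_domains: set[str] = set()

    def block(self, domain: str) -> None:
        # The central layer can stop a domain from distributing data.
        self.blocked_domains.add(domain)

    def publish(self, req: PublishRequest) -> None:
        if req.domain in self.blocked_domains:
            raise PermissionError(f"distribution blocked for domain '{req.domain}'")
        missing = REQUIRED_METADATA - set(req.metadata)
        if missing:
            raise ValueError(f"missing metadata: {sorted(missing)}")
        # Each domain writes only into its own container on the shared lake.
        self.containers.setdefault(req.domain, {})[req.product] = list(req.rows)

    def read(self, domain: str, product: str) -> list:
        # Other domains consume from the central layer, not from the producer.
        return list(self.containers[domain][product])


lake = SharedLake()
lake.publish(PublishRequest(
    domain="finance",
    product="ledger",
    metadata={"owner": "finance", "description": "GL entries", "classification": "internal"},
    rows=[{"entry_id": 1, "amount": 100.0}],
))
print(lake.read("finance", "ledger"))  # [{'entry_id': 1, 'amount': 100.0}]
```

Because every publish goes through one entry point, the central team gets a single place to enforce metadata delivery or block distribution, which is exactly the trade-off against domain autonomy discussed below.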

Besides offering a central storage layer, I also see companies providing centrally managed compute or processing services. For example, domain-specific transformations are applied within each domain using dedicated compute, while historical data is processed centrally using shared pools of compute resources. Such an approach can drastically reduce costs.
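A minimal sketch of that split, assuming a hypothetical `route` function and made-up compute target names: domain-specific transformation jobs go to the domain’s own dedicated compute, while historical processing goes to one shared, centrally managed pool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Job:
    domain: str
    kind: str  # "transform" = domain-specific logic, "historic" = large historical processing


# Hypothetical name for the centrally managed, shared compute pool.
SHARED_POOL = "central-shared-pool"


def route(job: Job) -> str:
    """Return the compute target for a job (target names are placeholders)."""
    if job.kind == "historic":
        # Heavy historical processing runs centrally on shared resources.
        return SHARED_POOL
    if job.kind == "transform":
        # Domain-specific transformations run on the domain's dedicated compute.
        return f"{job.domain}-dedicated"
    raise ValueError(f"unknown job kind: {job.kind!r}")


print(route(Job("marketing", "transform")))  # marketing-dedicated
print(route(Job("marketing", "historic")))   # central-shared-pool
```

The cost argument rests on the `historic` branch: rather than each domain sizing its own compute for large historical workloads, those jobs share one pool.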

The stronger centralization and conformance of this topology is also a consideration. It leads to a longer time to market and introduces more coupling between your domains. Your central distribution layer could hinder domains from delivering business value when capabilities aren’t ready. I also expect this topology to be more difficult in a multi-cloud setting, because it requires you to design a central, distributed logical entity that seamlessly moves data around while adhering to all governance standards. Implementing this using cloud-native standards is difficult given the nature of what cloud vendors offer today.

Nevertheless, I see many companies choosing a fully governed mesh topology. Most financial institutions and governments implement this topology, as do other companies that value quality and compliance over agility.

Scenario 3: Hybrid federated mesh

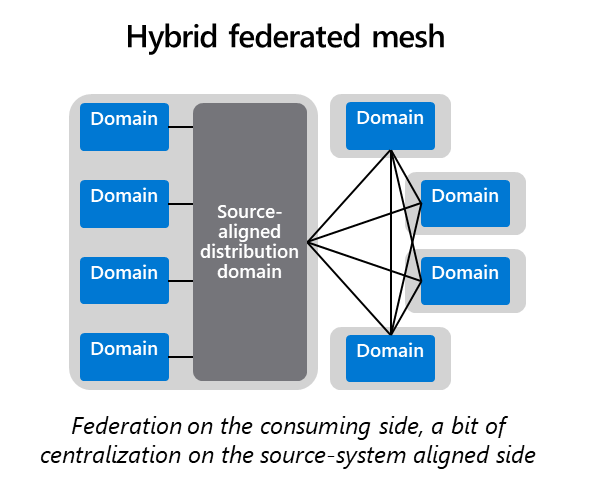

Not all companies have the luxury of having many highly skilled software engineers. Others are saddled with outdated legacy systems that are hard to maintain and extract data from. Companies that fall into these categories often implement “some” data mesh, as visualized in the image above.

What distinguishes this topology from other topologies? There’s less federation and more centralization. There’s a data product management mindset, but data products are created and maintained within a central platform instance. Sometimes, when a domain team lacks the necessary skills or resources, an enabling or platform team even takes ownership of data products.

On the consuming side, there’s typically a higher degree of autonomy and mesh-style distribution. Different teams might work on various use cases and take ownership of their transformed data and analytical applications. The resulting, newly created data products are distributed peer-to-peer or pushed back into the central platform.

A consideration for this topology is increased management overhead for source-system-aligned domains, as they’re likely to be onboarded by a central team. Organizations that use this architecture are likely to struggle with multiple operating models and more complex guidance and principles. There might also be inconsistent rules for data distribution, because consuming domains often become providing domains. Extra considerations and a governance model for implementing this architecture can be found here: https://towardsdatascience.com/data-mesh-the-balancing-act-of-centralization-and-decentralization-f5dc0bb54bcf.

Scenario 4: Value chain-aligned mesh

Organizations that specialize in supply chain management, product development or transportation place great importance on their value chains. What characterizes these companies? They require hyper-specialization or stream alignment to bring value to their customers. These value chains are often tightly interconnected and operational by nature. They also typically process data backward and forward: from operational to analytical, and back to operational.

In this structure, a value chain is considered a group of smaller domains that closely work together. Such value chains require higher levels of autonomy and can therefore be seen as larger domains. In such a topology, you typically see a distinction between inner data products, which stay within a value chain, and cross-domain data products. Central standards apply only when data crosses the boundaries of a value chain. You could also allow or mix different types of governance models: strict adherence in one domain, relaxed controls in others.
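Here’s a small Python sketch of boundary-dependent governance, under assumptions: the domain-to-value-chain mapping, the central standards and the per-chain rules are all hypothetical examples. Central standards are checked only when the consumer sits in a different value chain than the producer; inside a value chain, that chain’s own strict or relaxed rules apply.

```python
from dataclasses import dataclass

# Hypothetical mapping of smaller domains to the value chain they belong to.
VALUE_CHAIN = {
    "procurement": "supply",
    "warehousing": "supply",
    "routing": "transport",
    "fleet": "transport",
}

# Central standards, enforced only when data crosses a value-chain boundary.
CENTRAL_STANDARDS = {"schema_registered", "owner_assigned", "quality_checked"}

# Per-value-chain internal rules: stricter in one chain, relaxed in another.
INTERNAL_RULES = {
    "supply": {"owner_assigned"},
    "transport": set(),
}


@dataclass(frozen=True)
class DataProduct:
    name: str
    domain: str
    properties: frozenset  # governance properties this product satisfies


def required_checks(product: DataProduct, consumer_domain: str) -> set:
    producer_chain = VALUE_CHAIN[product.domain]
    consumer_chain = VALUE_CHAIN[consumer_domain]
    if producer_chain != consumer_chain:
        # Cross-domain data product: central standards apply.
        return set(CENTRAL_STANDARDS)
    # Inner data product: only the value chain's own rules apply.
    return set(INTERNAL_RULES[producer_chain])


def may_consume(product: DataProduct, consumer_domain: str) -> bool:
    return required_checks(product, consumer_domain) <= product.properties


stock = DataProduct("stock_levels", "warehousing", frozenset({"owner_assigned"}))
print(may_consume(stock, "procurement"))  # True: same value chain, internal rules only
print(may_consume(stock, "routing"))      # False: crosses chains, central standards apply
```

Keeping the chain mapping explicit also surfaces the weak spot mentioned next: if a domain’s value chain is unclear, the correct governance level is unclear too.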

The consideration for using value chains is that this model requires stronger guidance from architects, because boundaries might not always be explicit.

Scenario 5: Coarse-grained aligned mesh

Some organizations gained scale by growing organically or through mergers and acquisitions. These organizations generally have complex landscapes, sometimes with hundreds or thousands of applications and systems. Within these complex structures, you see different levels of governance, alignment and decomposition. Some structures might stand on their own and operate autonomously, while others are more integrated.

The structures observed within these large-scale companies are typically quite large. Domains are considered to hold tens or hundreds of applications. I consider such a topology to be a coarse-grained aligned mesh.

The difficulty with this topology is that boundaries aren’t always explicit. Data isn’t aligned with the boundaries of domains and business functions. More often, domain boundaries are based on relatively large organizational or regional viewpoints. This might create political infighting over who controls the data and what sovereignty is required.

Another challenge with the coarse-grained data mesh topology is capability duplication. Each coarse-grained domain is likely to use its own data platform for onboarding, transforming and distributing data, and implementing many platforms for larger domains leads to capability duplication. For example, data quality management and master data management are likely needed across the enterprise. Such a federated approach might lead to many implementations of data quality and master data management, one on each data platform. Ensuring consistent implementation of these services across all platforms requires strong collaboration and guidance. The larger your organization, the harder it gets.

This multi-platform approach often raises many questions: How to calibrate data platforms and domains? Are larger domains allowed to share a data platform? How to deal with overlapping data requirements when the same data is needed on different platforms? How to efficiently distribute data between these larger domains and platform instances? A good starting point is making a proper domain decomposition of your organization before implementing data mesh. Continue with setting application and data ownership. Carry on by formulating strong guidance on data product creation.

When considering the coarse-grained mesh topology, it’s important to strongly guide the transition to data mesh. This topology is characterized by a higher level of autonomy, and therefore needs stronger governance policies and self-service data platform capabilities. For example, registering a data product and making it discoverable might require specific guidance based on the domains’ technology and interoperability choices. Without such guidance, visibility across platforms can suffer, or costs can increase due to proliferation across domains.

Coarse-grained autonomy contradicts a true data mesh implementation. It introduces the risk that data is combined and integrated before data products are distributed, and it risks creating larger silos. These large monoliths create extra coupling between systems. They also obscure data ownership, because data products are distributed through intermediary systems, which makes it harder to see the actual source of origination. To mitigate these risks, introduce principles such as only capturing unique data and capturing data directly from its source of origination, using its unique domain context.
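The two mitigating principles can be turned into automated checks. The sketch below is a hypothetical example, not an established tool: it assumes an ownership register (`SYSTEM_OWNER`), a set of known intermediary systems, and lineage recorded as an ordered tuple of hops from the source system onward. It flags data products that pass through an intermediary, are published by a domain that doesn’t own the source system, or duplicate attributes another product already captures.

```python
from dataclasses import dataclass

# Hypothetical ownership register: which domain owns which source system.
SYSTEM_OWNER = {
    "crm": "sales",
    "erp": "finance",
    "integration_hub": "central_it",
}
# Systems that merely relay data; they are never a source of origination.
INTERMEDIARY_SYSTEMS = {"integration_hub"}


@dataclass(frozen=True)
class ProductSpec:
    name: str
    publishing_domain: str
    lineage: tuple        # ordered hops, starting at the source system
    attributes: frozenset


def validate(spec: ProductSpec, already_captured: dict) -> list:
    """Return principle violations; an empty list means the product complies."""
    problems = []
    source = spec.lineage[0]
    if any(hop in INTERMEDIARY_SYSTEMS for hop in spec.lineage):
        problems.append("data is not captured directly from its source of origination")
    if SYSTEM_OWNER.get(source) != spec.publishing_domain:
        problems.append(f"domain '{spec.publishing_domain}' does not own source '{source}'")
    # "Only capture unique data": flag attributes another product already exposes.
    for other, attrs in already_captured.items():
        overlap = spec.attributes & attrs
        if overlap:
            problems.append(f"duplicates {sorted(overlap)} already captured by '{other}'")
    return problems


customers = ProductSpec("customers", "sales", ("crm",), frozenset({"customer_id", "name"}))
print(validate(customers, {}))  # []

copy = ProductSpec("customer_copy", "finance", ("crm", "integration_hub"),
                   frozenset({"customer_id"}))
for issue in validate(copy, {"customers": customers.attributes}):
    print(issue)
```

Checks like these only work if lineage and ownership metadata are captured reliably, which ties back to the need for strong metadata management in coarse-grained topologies.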

Scenario 6: Coarse-grained and governed mesh

Some large-scale and complex organizations aim to overcome complexity, peer-to-peer distribution and interoperability deviation by building a central layer of distribution that sits between these larger structures.

In this topology, teams or organizational structures agree on a distribution platform or marketplace through which data products can be published and consumed. This topology adds some of the characteristics of the governed data mesh topology, such as addressing the time-variant and non-volatile concerns. On the other hand, it allows for more relaxed controls within these larger boundaries.

Like the previous scenario, all coarse-grained approaches require high maturity from the data platform team. All components need to be self-service and must integrate well between the central layer of distribution and the domain platforms. Metadata management, governance standards and policies are crucial. If the central architecture and governance team has no strong mandate, these architectures are likely to fail.

Conclusion

Data mesh is a shift that can completely redefine what data management means for organizations. Instead of centrally executing all areas of data management, your central team becomes responsible for defining what constitutes strong data governance and what makes a self-service data platform.

In the transition to federation, companies make trade-offs. Some organizations prefer a high degree of autonomy, while others prefer quality and control. Some organizations have a relatively simple structure, while others are brutally large and complex. Creating the perfect data architecture isn’t easy, so for your strategy, I encourage you to see data mesh as a framework. There’s no right or wrong. Data mesh comes with best practices and principles. Some of these you might like, others you might not. So, implement whatever works best for you.

Source: www.towardsdatascience.com