How the next wave of intelligence will be achieved.

Machine learning has come a long way since the 1950s, when statistical methods for simple machine learning algorithms were devised and Bayesian methods were introduced for probabilistic modelling. Toward the end of the twentieth century, research into models like support vector machines and elementary neural networks exploded with the popularization of backpropagation, building on the foundations Alan Turing laid for computing. Since then, the availability of massive computation has given way to massive neural networks that can beat world champions at Go, generate realistic art, and read. Historically, progress in machine learning has been driven by the availability of computational power.

As the drive to make classical computer chips more and more powerful begins to dry up (transistors are nearing the smallest physical size they can be), machine learning can no longer rely on steady growth in computational power to develop ever more powerful and effective models. In response, machine learning is turning to compositional learning.

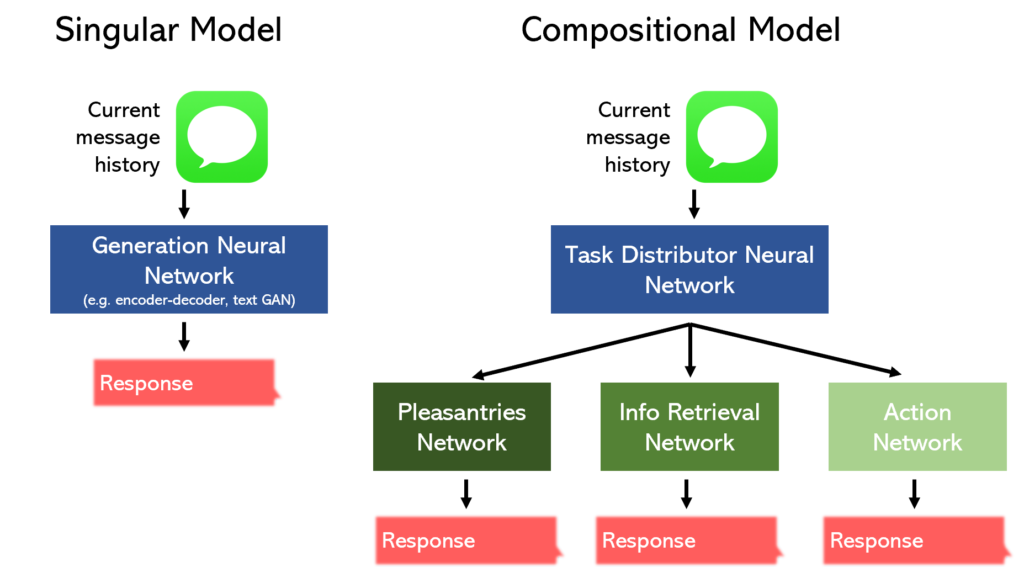

Compositional learning is based on the idea that one model can’t do it all. When deep neural networks were used for only one task (say, classifying a cell as malignant or benign, or an image as a dog or a cat), they could perform reasonably well. Unfortunately, neural networks have been observed to do only one thing very well. As applications for artificial intelligence grow steadily more complex, a single neural network can only grow larger and larger, accounting for the new complications with more neurons.

As discussed earlier, this continual growth is reaching a dead end. By combining several neural networks, each performing one segment of the complete task, the model as a whole performs much better at these intricate tasks while keeping computational requirements reasonable. When one task is broken down across several neural networks, each network can specialize in its own field instead of one network needing to cover everything. This is analogous to asking the President (or Prime Minister) to make a decision with, rather than without, support from the Secretaries of Labor, Defense, Health, and other departments.

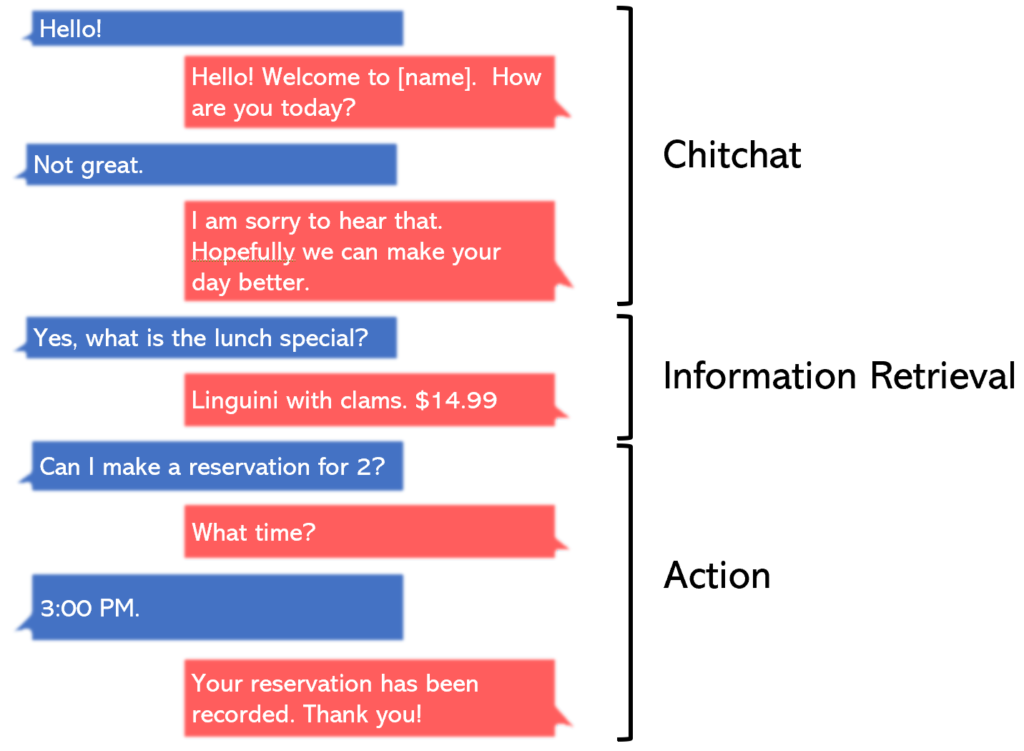

Consider, for instance, the following task: create a chatbot for an upscale restaurant that engages with the user and can perform common tasks like inquiring about the menu or making a reservation, as well as making idle chitchat.

The conversation can clearly be broken down into three sections: pleasantries and chitchat, information retrieval, and actions. Instead of having one machine learning model that takes in the previous interactions and outputs a response, we can opt for a more distributed system:

One neural network infers what task is at hand (whether the user is steering the conversation toward a pleasantry, information, or an action) and assigns the task to a specialized network. By using a distributed model instead of something more direct like an encoder-decoder network or a text GAN*, two benefits are realized:

Higher accuracy. Because the task is delegated to three separate models, each specializing in its own field, the performance of the system as a whole improves.

Faster runtime. Although training distributed models is generally a more difficult process, distributed models can be much faster when making predictions, something essential for projects that require quick responses. This is because the distributed model can be thought of as ‘splitting’ the singular model, so information passes only through the neurons relevant to the current task instead of needing to flow through the entire network.

*Encoder-decoder networks and GANs are composed of multiple networks and can perhaps be thought of as compositional models themselves. In this context, they are considered singular only because the compositional model expands upon them to make them more effective. The compositional model structure described here is more of a ‘composition-composition model’.
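The routing structure described above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: the names `route`, `respond`, `SPECIALISTS`, and the three `*_model` functions are hypothetical, the keyword lists are made up, and simple functions stand in for what would be trained neural networks in a real system.

```python
from typing import Callable, Dict, Tuple


# Hypothetical stand-ins for the three specialized networks.
# In a real system each would be a trained model, not a fixed reply.
def chitchat_model(message: str) -> str:
    return "Lovely to hear from you!"


def information_model(message: str) -> str:
    return "Let me look that up on the menu for you."


def action_model(message: str) -> str:
    return "I can help with that reservation."


def route(message: str) -> str:
    """Stand-in for the router network: map a message to a task label.

    Keyword matching is an assumption made for illustration; the article
    describes a neural network performing this inference.
    """
    text = message.lower()
    if any(word in text for word in ("reserve", "reservation", "book")):
        return "action"
    if any(word in text for word in ("menu", "price", "vegetarian")):
        return "information"
    return "chitchat"


# Each task label points at exactly one specialist.
SPECIALISTS: Dict[str, Callable[[str], str]] = {
    "chitchat": chitchat_model,
    "information": information_model,
    "action": action_model,
}


def respond(message: str) -> Tuple[str, str]:
    """Run the router, then only the specialist it selects."""
    task = route(message)
    return task, SPECIALISTS[task](message)


if __name__ == "__main__":
    for text in ("How are you today?", "What is on the menu?", "Can I book a table?"):
        print(respond(text))
```

Only one specialist runs per message, which is the source of the runtime benefit claimed above: the other two are never evaluated.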

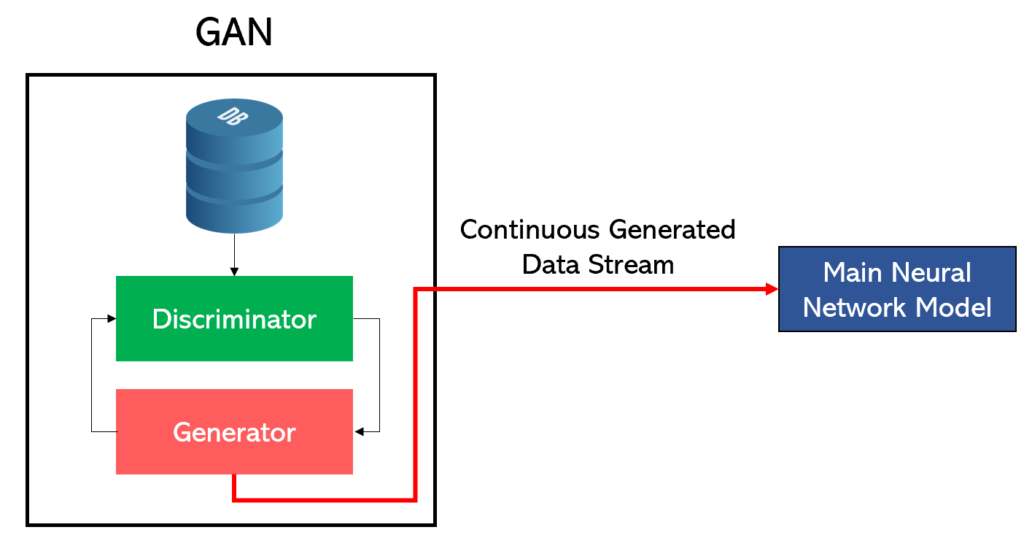

Or, consider the use of GANs (generative adversarial networks, a kind of generative model) to replace traditional data augmentation methods, which in many cases are ill-suited to the context and introduce excessive, harmful noise. By continuously funneling new GAN-generated data into a model, two problems are solved:

Uneven class labels. A huge issue with data collection is that a model tends to make predictions in the same proportions as the labels it was trained on. If 75% of the labels in a cat-dog dataset are ‘dog’, then the model will predict ‘dog’ most of the time as well. By using GANs, additional images can be created to even out the class imbalance.

Overfitting. Overfitting is normally addressed with data augmentation, but GANs offer a solution that performs better across a wide array of contexts. Distortions to, say, celebrity faces may alter the image so much that it no longer matches its class. GANs, on the other hand, provide the additional variation needed to combat overfitting and can more efficiently boost the effectiveness of model learning.
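To make the class-balancing idea concrete, here is a rough sketch using the 75/25 dog-cat split from the example above. Everything named here is an assumption for illustration: `fake_generator` is a hypothetical placeholder for sampling from a trained, class-conditional GAN (it just returns random numbers), and `balance_with_generator` is one simple way to top up minority classes, not a method taken from the article.

```python
import random
from collections import Counter
from typing import Callable, Dict, List


def fake_generator(label: str, rng: random.Random) -> Dict:
    """Hypothetical stand-in for sampling a trained conditional GAN.

    A real generator would return a synthetic image of the requested class;
    here four random numbers play the role of pixels.
    """
    return {"label": label, "pixels": [rng.random() for _ in range(4)], "synthetic": True}


def balance_with_generator(
    samples: List[Dict],
    generate: Callable[[str, random.Random], Dict],
    seed: int = 0,
) -> List[Dict]:
    """Add generated samples until every class matches the largest class."""
    rng = random.Random(seed)
    counts = Counter(sample["label"] for sample in samples)
    target = max(counts.values())
    balanced = list(samples)  # keep all real samples untouched
    for label, count in counts.items():
        balanced.extend(generate(label, rng) for _ in range(target - count))
    return balanced


# The 75% dog / 25% cat dataset from the example above.
dataset = [{"label": "dog", "synthetic": False} for _ in range(75)]
dataset += [{"label": "cat", "synthetic": False} for _ in range(25)]

balanced = balance_with_generator(dataset, fake_generator)
label_counts = Counter(sample["label"] for sample in balanced)
```

After balancing, `label_counts` holds equal counts for both classes, with the extra ‘cat’ samples all marked as synthetic.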

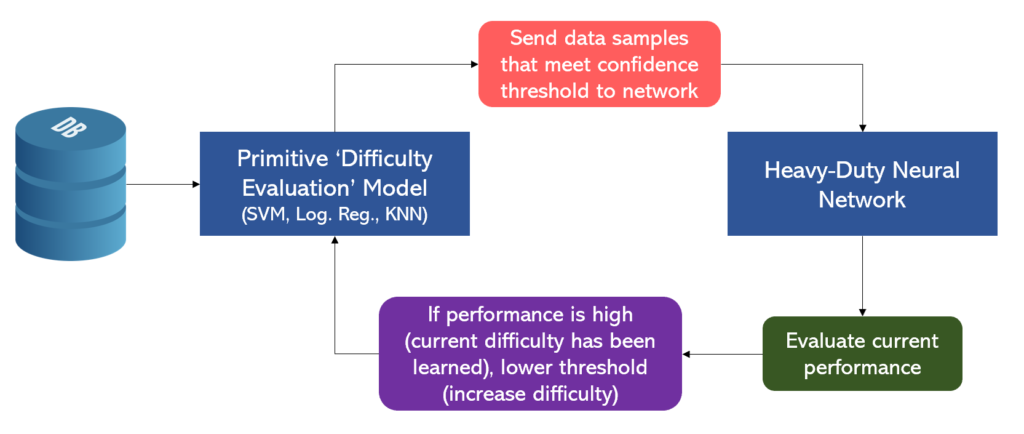

Or consider a dual-model system that lets the main model learn easy samples first (those that a primitive difficulty-evaluation model can solve with high confidence) and introduces more difficult training samples only after the deep neural net has mastered the previous ones.

This type of progressive-difficulty learning (often called curriculum learning) could be more effective than traditional training methods because it establishes ground concepts first, then fine-tunes the weights on more difficult data samples. The idea relies on compositional model frameworks, which consist of two or more sub-models linked by a logic flow.
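As a rough sketch of this dual-model flow, the snippet below orders training data by a difficulty score and releases it in stages. The function name `curriculum_stages`, the confidence thresholds, and the toy samples are all assumptions for illustration; the `confidence` callable stands in for the primitive difficulty-evaluation model.

```python
from typing import Callable, Iterator, List, Sequence, Tuple

Sample = Tuple[str, float]  # (sample id, confidence of the difficulty model)


def curriculum_stages(
    samples: Sequence[Sample],
    confidence: Callable[[Sample], float],
    thresholds: Sequence[float] = (0.9, 0.6, 0.0),
) -> Iterator[List[Sample]]:
    """Yield growing training pools, easiest samples first.

    `thresholds` must be in descending order. A sample joins the pool at the
    first stage whose threshold its confidence score meets.
    """
    remaining = sorted(samples, key=confidence, reverse=True)
    pool: List[Sample] = []
    for threshold in thresholds:
        pool.extend(s for s in remaining if confidence(s) >= threshold)
        remaining = [s for s in remaining if confidence(s) < threshold]
        yield list(pool)  # copy, so later stages do not alter earlier ones


# Toy data: the second field plays the role of the difficulty model's confidence.
toy_samples = [("a", 0.95), ("b", 0.70), ("c", 0.30), ("d", 0.92)]
stages = list(curriculum_stages(toy_samples, confidence=lambda s: s[1]))
```

In a real training loop, the main network would train on each stage until some mastery criterion is met (for example, a validation accuracy target) before the next stage is requested; that criterion is left out here because the article does not specify one.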

It should be noted that compositional learning differs from ensemble methods because a) the models in compositional learning perform different tasks and b) context-based relationships between models, which are absent from ensemble methods, are a key part of compositional learning.

Perhaps the reason compositional learning methods work so well is that our brains are compositional in nature as well. Each region of the brain specializes in a specific task, and their signals are coordinated and aggregated to form a working, dynamic decision-maker.

Compositional learning is much more difficult than standard modelling, which entails choosing the right algorithm and prepping the data. In compositional systems, there are countless ways to structure the relationships between the models, all dependent on context. In a way, constructing compositional learning models is an art. Determining what types of models to use and how to relate them requires additional coding, creative thinking, and a fundamental understanding of the nature of models, but it is immensely rewarding when dealing with the complex problems AI will need to tackle in 2020 and the future.

The future of AI lies in compositional learning.

—

Source: Andre Ye